A week ago, I removed all Java-related packages from my system. I thought I had absolutely no need for them until I tried to minify CSS, via a Makefile, using YUI Compressor, which is written in Java. The same thing would have happened when I needed to minify JavaScript files: I use Google Closure Compiler, which is also written in Java.

Oh, right, that's why I had still kept the Java runtime environment, the open source one, IcedTea, I thought to myself.

At this point, you can bet that I wanted to get rid of Java for real. The IcedTea binary package is 30+ MB, plus nearly 9 MB for YUI Compressor and Google Closure Compiler. To be fair, they don't use a lot of space, but I just don't like having Java on my system when only two programs need it. Besides, installing IcedTea pulls in two virtual packages and two more packages for configuration.

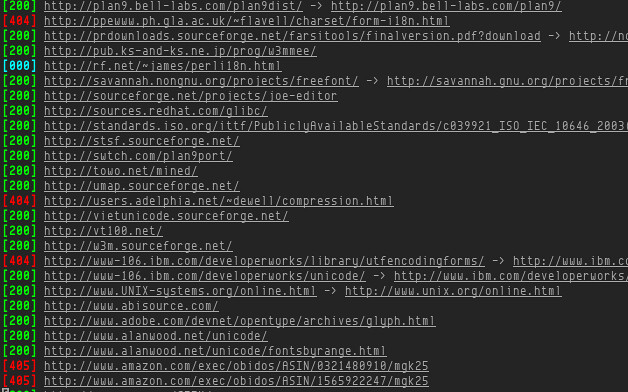

So, my choice was to use the online versions, as I already knew Google hosts one at http://closure-compiler.appspot.com/ and there is also a popular Online YUI Compressor hosted by Mike Horn.

Going Java-less is only a matter of two curl commands. The first is for YUI Compressor, the second for Google Closure Compiler:

    curl -L -F type=CSS -F redirect=1 -F 'compressfile[]=@input.css;filename=input.css' -o output.min.css http://refresh-sf.com/yui/
    curl --data output_info=compiled_code --data-urlencode js_code@input.js http://closure-compiler.appspot.com/compile > output.min.js

Replace the input and output filenames with yours. If you need to pipe, use - instead of the input filename; - indicates that the content comes from standard input.
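For the piping case, here is a rough sketch that wraps the Closure Compiler command in a shell function reading from standard input. The function name minify_js and the CURL override variable (handy for a dry run with echo) are my own inventions for illustration, not something either service provides.

```shell
# Hypothetical helper: minify JavaScript read from standard input.
# js_code@- tells curl to take the value of js_code from stdin.
# Set CURL=echo to print the arguments instead of sending a request.
minify_js() {
    "${CURL:-curl}" --data output_info=compiled_code \
        --data-urlencode js_code@- \
        http://closure-compiler.appspot.com/compile
}

# Usage: cat a.js b.js | minify_js > all.min.js
```

With this, several source files can be concatenated and minified in one go without any temporary file.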

YUI Compressor can also minify JavaScript, but I found that Google Closure Compiler does a slightly better job. If you use YUI Compressor for JavaScript as well, simply change the type to type=JS.
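To avoid keeping two nearly identical commands around, the type could be made a parameter. This is only a sketch: yui_minify and the CURL override are hypothetical names of mine, and the form fields are just the ones from the command above, with @ being curl's syntax for uploading a file.

```shell
# Hypothetical helper: yui_minify TYPE INPUT OUTPUT, where TYPE is CSS or JS.
# Set CURL=echo to print the arguments instead of sending a request.
yui_minify() {
    "${CURL:-curl}" -L -F "type=$1" -F redirect=1 \
        -F "compressfile[]=@$2;filename=$2" \
        -o "$3" http://refresh-sf.com/yui/
}

# Usage:
#   yui_minify CSS input.css output.min.css
#   yui_minify JS  input.js  output.min.js
```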

Both (Online) YUI Compressor and Google Closure Compiler have some options that you can simply add to the command. It shouldn't be hard since you have a command template to work from. I only use the default compression options; they are good enough for me.
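As an example of adding an option, the Closure Compiler service takes a compilation_level parameter (WHITESPACE_ONLY, SIMPLE_OPTIMIZATIONS, or ADVANCED_OPTIMIZATIONS). The sketch below passes it as one more --data field. The function name closure_minify and the CURL override are hypothetical, and falling back to SIMPLE_OPTIMIZATIONS when no level is given is my assumption about a sensible default.

```shell
# Hypothetical helper: closure_minify INPUT [LEVEL]
# LEVEL is one of WHITESPACE_ONLY, SIMPLE_OPTIMIZATIONS, ADVANCED_OPTIMIZATIONS.
# Set CURL=echo to print the arguments instead of sending a request.
closure_minify() {
    "${CURL:-curl}" --data output_info=compiled_code \
        --data "compilation_level=${2:-SIMPLE_OPTIMIZATIONS}" \
        --data-urlencode "js_code@$1" \
        http://closure-compiler.appspot.com/compile
}

# Usage: closure_minify input.js WHITESPACE_ONLY > output.min.js
```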